Even when a presidential race is close, you should expect some polls to be outliers. I asked ChatGPT to imagine a population evenly divided 50/50 between two candidates and to simulate 30 polls, each of one thousand voters chosen at random. Here are the results.

As you can see, very few polls give you a result that is exactly 50/50. Across the 30 polls, the results range from a Republican lead of 3.4 percentage points to a Democratic lead of 4.2. Most come close to the mean. The 25th percentile result for Democrats shows them at 49.3%, compared to 50.8% at the 75th percentile. The standard deviation of Democratic vote share across simulations is 1.73%.
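The same experiment is easy to run directly. Below is a minimal sketch in Python, assuming pure simple random sampling with no weighting or design effects; the function names and the seed are illustrative. Because the draws are random, the summary numbers will differ from run to run and from those above. The sample standard deviation should land near the theoretical value sqrt(p(1-p)/n), about 1.58 points at p = 0.5 and n = 1,000.

```python
import random
import statistics


def simulate_polls(n_polls=30, sample_size=1000, dem_share=0.5, seed=None):
    """Return the Democratic share (in %) for n_polls simple random samples."""
    rng = random.Random(seed)
    shares = []
    for _ in range(n_polls):
        # Each respondent independently backs the Democrat with probability dem_share.
        dem_votes = sum(rng.random() < dem_share for _ in range(sample_size))
        shares.append(100 * dem_votes / sample_size)
    return shares


def summarize(shares):
    """Min, max, 25th/75th percentiles, and sample SD of the simulated shares."""
    p25, _, p75 = statistics.quantiles(shares, n=4)
    return {
        "min": min(shares),
        "max": max(shares),
        "p25": p25,
        "p75": p75,
        "sd": statistics.stdev(shares),
    }


print(summarize(simulate_polls(seed=2024)))
# Theoretical sampling SD of the share at p = 0.5, n = 1000 (about 1.58 points).
print(100 * (0.5 * 0.5 / 1000) ** 0.5)
```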

Something like this is what you would see if pollsters used the exact same methodology time and time again and we knew they were getting perfectly representative samples. In real life, pollsters have to make assumptions about things like who is likely to turn out, and they vary in how they reach voters and how they weight the responses. The mood of the electorate also changes, even over a short period of time. So if pollsters are doing their jobs well, we should see much more variation across results than we do in the simulation above. The distribution of polls should look a lot messier.

One worry we have in polling is called “herding.” Firms become afraid to go out on a limb and report outliers because they don’t want to be embarrassed if they’re wrong. If you’re going to be wrong, it’s best to be wrong in the same way as everyone else, rather than get all kinds of attention for delivering an unexpected result and then being the only one to screw up the election. Admittedly, this also creates an incentive to seek out outlier results, since that way you might be the only one who gets it right, but this is a high-risk, high-reward strategy. Rasmussen and Trafalgar seem to always lean more toward the GOP than the herd does. This worked out well for them in 2016 and 2020 but made them look foolish in 2018.

Anecdotally, it seems like most polling organizations are risk averse. When firms suppress outliers, every new poll simply reinforces the conventional wisdom, which makes outliers even less likely to appear. Eventually, the polls aren’t providing much information at all, since everyone’s results depend on the average of what the rest of the herd is doing.
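The feedback loop described above can be made concrete with a toy simulation. This is a minimal sketch under loudly hypothetical assumptions: the true margin is zero, each firm's honest estimate has a made-up noise SD of 4 points, and a herding firm publishes a blend (controlled by a hypothetical `herd_weight` parameter) of its honest number and the average of the polls already released. None of these parameters come from real pollster data.

```python
import random
import statistics


def publish_polls(true_margin, n_polls, noise_sd, herd_weight, rng):
    """Hypothetical herding model: each firm gets an honest estimate, then
    publishes a blend of that estimate and the average of earlier polls."""
    published = []
    for _ in range(n_polls):
        honest = rng.gauss(true_margin, noise_sd)
        if published and herd_weight > 0:
            herd_avg = statistics.fmean(published)
            reported = (1 - herd_weight) * honest + herd_weight * herd_avg
        else:
            reported = honest  # the first poll (or a non-herding firm) reports honestly
        published.append(reported)
    return published


def compare(herd_weight, trials=2000, n_polls=20, noise_sd=4.0, seed=0):
    """Mean within-season SD of published polls, and mean absolute error
    of their average, across many simulated seasons with a true margin of 0."""
    rng = random.Random(seed)
    spreads, avg_errors = [], []
    for _ in range(trials):
        polls = publish_polls(0.0, n_polls, noise_sd, herd_weight, rng)
        spreads.append(statistics.stdev(polls))
        avg_errors.append(abs(statistics.fmean(polls)))
    return statistics.fmean(spreads), statistics.fmean(avg_errors)


for w in (0.0, 0.5, 0.8):
    spread, err = compare(w)
    print(f"herd weight {w:.1f}: poll SD {spread:.2f}, error of average {err:.2f}")
```

In this toy model, raising `herd_weight` shrinks the spread of published polls while making their average less accurate, because the earliest polls anchor everything that follows. That is one way to see why a herded poll adds little information.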

Below are the distributions of national polls from 2016, 2020, and 2024, including only those that both began and ended between October 10 and October 25. Only polls posted to 538 as of this writing are included. If a pollster released more than one poll covering the same period, I used likely-voter results over registered-voter results, and results including more than two candidates over those showing only Trump and the Democratic candidate. If a pollster had two polls that were equivalent on those criteria, I took the average and rounded up to the next whole number.
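The selection rules above amount to a small filtering pipeline. Here is a minimal sketch in Python. The `Poll` record, its field names, and the sign convention for the margin (Democratic minus Republican) are hypothetical and are not 538's actual data schema. Reading "rounded up" as `math.ceil` of the averaged Democratic margin is also an assumption.

```python
import math
from dataclasses import dataclass
from datetime import date


@dataclass
class Poll:
    pollster: str
    start: date
    end: date
    likely_voters: bool      # True for a likely-voter sample, False for registered voters
    multi_candidate: bool    # True if third-party candidates were included
    dem_margin: float        # hypothetical: Democratic share minus Trump's share, in points


def preference(poll):
    # Likely voters beat registered voters; multi-candidate beats head-to-head.
    return (poll.likely_voters, poll.multi_candidate)


def select_polls(polls, window_start, window_end):
    """Return one Democratic margin per pollster under the selection rules above."""
    # Keep only polls that both began and ended inside the window.
    by_pollster = {}
    for p in polls:
        if window_start <= p.start and p.end <= window_end:
            by_pollster.setdefault(p.pollster, []).append(p)

    selected = {}
    for name, group in by_pollster.items():
        best = max(preference(p) for p in group)
        ties = [p for p in group if preference(p) == best]
        if len(ties) == 1:
            selected[name] = ties[0].dem_margin
        else:
            # Assumption: "rounded up" means math.ceil of the averaged margin.
            avg = sum(p.dem_margin for p in ties) / len(ties)
            selected[name] = math.ceil(avg)
    return selected
```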

As we can see, 2016 looks like a very nice bell curve. The polls run from Trump +3 to Hillary +15, a gap of 18 points. In 2020 and 2024, the ranges are 12 and 10 points, respectively. It looks like pollsters started herding in 2020, and if anything, things have gotten worse this year. Except for one poll by BigVillage that shows Harris +7, every other national result in 2024 is between Trump +2 and Harris +4. That kind of tight clustering might pass in an idealized simulation, but it is probably too close together if pollsters are doing their jobs properly in the real world.
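To put these ranges in context, one can ask how wide a spread sampling error alone would produce. A rough sketch, assuming every poll is a simple random two-way sample of 1,000 respondents with no house effects, weighting, or design effect (real polls vary in size, so this is only a baseline). Since the margin is 2p - 1, its sampling SD is twice the share's SD, about 3.2 points at n = 1,000, and the expected range grows with the number of polls.

```python
import random
import statistics


def margin_sd(sample_size, p=0.5):
    """Sampling SD of a two-way margin (D minus R), in points.
    The margin is 2p - 1, so its SD is twice the SD of the share."""
    return 200 * (p * (1 - p) / sample_size) ** 0.5


def expected_range(n_polls, sample_size=1000, p=0.5, sims=2000, seed=0):
    """Average (max - min) margin across n_polls independent polls under
    pure random sampling, using a normal approximation."""
    rng = random.Random(seed)
    sd = margin_sd(sample_size, p)
    center = 100 * (2 * p - 1)
    ranges = []
    for _ in range(sims):
        margins = [rng.gauss(center, sd) for _ in range(n_polls)]
        ranges.append(max(margins) - min(margins))
    return statistics.fmean(ranges)


print(round(margin_sd(1000), 2))  # 3.16
for k in (10, 20, 30):
    print(k, round(expected_range(k), 1))
```

Under these assumptions, twenty independent polls would be expected to span about 12 points from sampling noise alone, before any real-world methodological differences are added; larger samples would narrow that baseline.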

Let’s do the same analysis for Pennsylvania, which is thought to be the most likely tipping-point state.

Once again, 2024 shows a lower standard deviation. In 2020, Pennsylvania polls ranged from Trump +3 to Biden +10. In 2024 they range from Trump +3 to Harris +1, albeit with fewer polls.

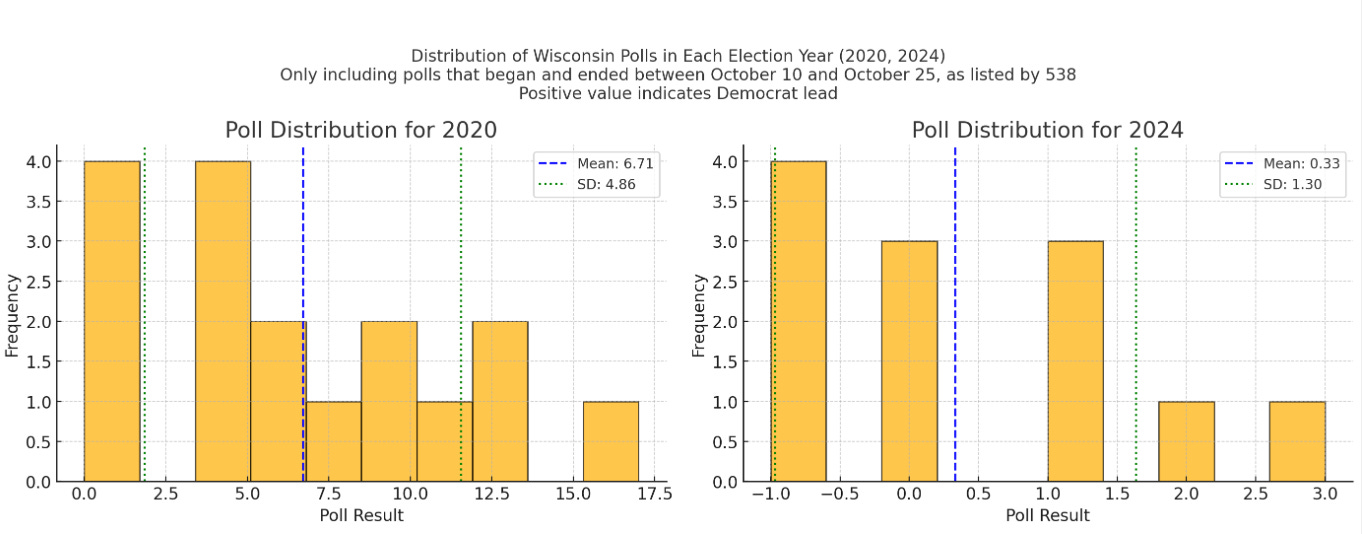

Finally, here is the same analysis for Wisconsin, in 2020 and 2024.

Oh come on! 2024 has 12 polls, and each is between Trump +1 and Harris +3. Meanwhile, the 2020 results are all over the place.

All of this indicates that pollsters were a lot more independent-minded in 2016 than they have been in subsequent years. At the national level, 2020 and 2024 are about equally bad, while in Pennsylvania and Wisconsin this election cycle seems much worse than before. I’ll leave the analysis of the other swing states to others.

Here’s what the front page of the New York Times looks like right now.

I simply don’t believe that six out of seven swing states will be decided by one point or less.

One obvious reason we might be seeing herding is that pollsters underestimated Trump in both 2016 and 2020, and they don’t want to do so again. Because the last two elections were close, this time they might guess the safest thing to do is just to assume it will be a 50/50 race.

What are the implications of all this for what’s going to happen next week? It’s difficult to know. If all the pollsters are assuming the race is close, they might be obscuring the mood of an electorate that is actually tilting one way or the other, meaning that either Trump or Harris is going to walk away with a clear victory. For all we know, the firms are right and the race is as close as it looks. Regardless, when polls all look this similar, new ones probably aren’t adding much value.

I'm not in politics, but I am in an industry in which prognostication is huge, and I can tell you this flat-out: clients will pay huge amounts for the latest, cutting-edge models, then react with confusion and disappointment if those models deviate even slightly from the average or the consensus. I'm halfway convinced that you could slap a nice skin on a simple average of polls, say it has all the latest machine learning technology, and people would line up for it.

I think the lack of outliers is less about pollsters being shaken by Trump’s overperformance in 2016 and 2020 and more about this election being genuinely close.

In 2016 and 2020, the herd was gathered in Safe D territory, so even a significant deviation from the herd in either direction would likely still show a Democratic victory. The poll would then still call the winner correctly, which limited the potential for embarrassment.

Publishing a Biden +12 poll when Biden may win by 5? Who cares, we’ll still get it right. Publishing a Kamala +5 poll when Trump may win by 2? Hell no, we’re not publishing that; if we’re wrong, we’ll get crucified.