In the 2016 election, over 136 million votes were cast for president. Of those, 65,853,514 (48.2%) went for Hillary Clinton, compared to 62,984,828 (46.1%) for Trump.

In other words, Hillary Clinton received 2.1% more of the vote. According to the last FiveThirtyEight average, polls had Hillary leading by 3.9% going into Election Day.

The narrative, of course, became that the polls “got it wrong,” because people think in binary terms. Headlines regularly highlight individuals who “called” the 2016 election, as if they have some special insight we should all listen to. By this logic, a pollster who forecasted that Trump would win by 30% was better at his job than one who had Hillary + 2, the actual result.

The educated public has become acutely aware of many failures by so-called experts in recent years. Over the course of the COVID-19 crisis, epidemiologists have incorrectly advised people not to wear masks, and many of them seem to support or condemn protests based on their political content. Phil Tetlock famously showed that geopolitical experts were not noticeably better at forecasting than educated laymen, and the replication crisis has made many wonder how much anything published in psychology can be trusted.

Yet it’s also worth talking and thinking about when science goes right, because success has as much to teach us as failure. Election polls usually have somewhere around 1,000 respondents. In 2016, by taking the average of those polls, experts were able to predict the result of an election with 136 million votes cast to within 2%. Truly remarkable!

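This is all the more impressive when you consider that many voters broke towards Trump at the last moment, which could explain why the polls were “off” by 1.8% (a 3.9-point lead in the average versus a 2.1-point margin in the result). In 2016, about 12.5% of voters made up their minds on Election Day itself.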

How well would you do if you were forced to predict election results based on gut intuition, what the media was saying, or talking to people you know? Most people would surely be off by much more than 2%. To be able to look at polls that talk to 1,000 people, and get a ballpark estimate of what the outcome will be in an election with 136 million votes cast is quite amazing, and polling firms should be given credit for it. You wouldn’t necessarily predict such a thing was possible, and my intuitions have a hard time accepting it, even though I’ve seen the results.
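Part of why this feels counterintuitive is that, for a simple random sample, sampling error depends on the number of respondents, not on the size of the electorate. The short Python sketch below illustrates this with the textbook 95% margin of error for a proportion, 1.96 × sqrt(p(1 − p)/n), plus an optional finite population correction. It assumes idealized simple random sampling; real polls also face non-response and weighting errors that this formula ignores, and the function name `margin_of_error` is only an illustrative choice.

```python
import math
from typing import Optional


def margin_of_error(p: float, n: int, population: Optional[int] = None, z: float = 1.96) -> float:
    """Approximate 95% margin of error for a share p estimated from a simple
    random sample of n respondents (idealized: no non-response or weighting error)."""
    standard_error = math.sqrt(p * (1 - p) / n)
    if population is not None:
        # Finite population correction: only matters when n is a sizable
        # fraction of the population being sampled.
        standard_error *= math.sqrt((population - n) / (population - 1))
    return z * standard_error


# One poll of 1,000 people, candidate share near 50%.
print(f"{margin_of_error(0.5, 1_000):.2%}")  # 3.10%

# The same poll, corrected for an electorate of 136 million voters: no visible change.
print(f"{margin_of_error(0.5, 1_000, population=136_000_000):.2%}")  # 3.10%

# Ten such polls pooled as if they were one sample of 10,000 respondents.
print(f"{margin_of_error(0.5, 10_000):.2%}")  # 0.98%
```

The pooled figure is a best case: averaging polls cancels their independent sampling noise, but not errors the polls share with one another.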

In 2016, the polls were actually better than their historical average. Since 1972, pollsters have been within an average of 4.1% of the actual election results.

Of course, American presidential elections are determined by the electoral college, and thus the narrative developed that polls were “wrong.” It must be said that few were thinking about the electoral college in 2016, as most of the time the popular vote winner ends up becoming president. Among the people who “called 2016” for Trump, most were actually saying he’d win the popular vote, taking the popular vote as indicative of who would win the presidency. As far as I know, nobody was saying “the polls are basically right and Hillary will get more votes, but they’ll be slightly off in three midwestern states and Trump will become president.” Rather, partisans were engaging in wishful thinking, and declared victory after they got lucky.

Why Does Polling Work So Well?

We get a great deal of analysis when science goes wrong, and this can be valuable, as it tells us what not to do. By the same logic, when one group of people is doing particularly well, it is worthwhile to go in and understand why.

Polling has two qualities that make it possible to develop real expertise.

1. Objective criteria for judging performance.
2. Reputations tied to being correct (skin in the game).

Number 1 is simple enough: without objective feedback, we cannot know who is right or wrong. I think the American regime-change wars in Iraq and Libya have been disasters, but supporters of those wars still dream up nightmare scenarios about what would have happened if the U.S. had not intervened, just as they did before military action was taken. With no counterfactual to check against, the argument never gets settled.

Rasmussen, the favorite pollster of Republicans, became a laughingstock in the 2018 midterms when it performed worse than any other pollster. As Harry Enten writes,

Rasmussen's final poll was the least accurate of any of the 32 polls. They had the Republicans ahead nationally by one point. Democrats are currently winning the national House vote by 8.6 points. That's an error of nearly 10 points.

Of course, it's possible for any pollster to have one inaccurate poll. Fortunately, for statistical purposes, Rasmussen released three generic ballot polls in the final three weeks of the 2018 campaign.

The average Rasmussen poll had Democrats ahead by 1.7 points on the generic ballot. That's an underestimation of their eventual position of nearly 7 points. This made Rasmussen's average poll more inaccurate than any other pollster.

Looking at all pollsters, the average poll hit the mark nearly perfectly. The average gold standard poll (i.e. ones that use live interviews, calls cell phones and is transparent about its practices) over the final three weeks of the campaign had Democrats ahead by 9.4 points on the generic congressional ballot. That's an error of less than a point. The average pollster overall, according to the FiveThirtyEight aggregate, had Democrats up by 8.7 points. Once all the votes are counted, that could have hit the nail on the head.

Rasmussen is known for consistently giving President Trump a higher approval rating than other pollsters. Yet its Trump-Biden polls show results that are consistent with the general trend. Why the disparity? I would guess that the company has learned from 2018 and realized that if it happens again, it will no longer be seen as a reputable pollster. We will eventually find out who voters prefer between Trump and Biden. But we’ll never have a national election that will determine Trump’s approval rating once and for all. So Rasmussen agrees with other pollsters on the things it can be judged by, but disagrees with them and tells Republicans what they want to hear when there is no objective standard by which it will be judged.

In 2012, I had a friend who was sure Romney was going to win the election. He told me that, although polls showed a close race, this was because pollsters didn’t call cell phone numbers, and for some reason that created a bias towards Obama. I didn’t listen very closely to his analysis, but assumed that pollsters had already thought about the “cell phone problem” more than he had, if it even existed. Knowing that he was a Republican reinforced this view.

As it turned out, the 2012 polls actually were biased in favor of Romney, by about 3%.

My friend has suffered no reputational damage that I know of for being wrong about 2012. I was right to assume that pollsters, whose full-time job it is to forecast the election, had thought about the issue more carefully than my friend, who was probably just repeating some talking point he had read on a right-wing website and whose career wouldn’t be affected whether he was right or wrong.

“Just take the polling and ignore everything else,” with a few caveats, is basically the philosophy of FiveThirtyEight. Like individual pollsters, Nate Silver’s website has skin in the game, because it publishes probabilities for enough elections that its forecasts can be judged on how they turned out (someone who “called 2016” would’ve had a 50% chance of being right just by guessing!). Reviewing its own performance, FiveThirtyEight finds that it does a pretty good job, meaning that, for example, when it says a candidate has a 70% chance of winning, that candidate actually wins something close to 70% of the time. Here’s how its Senate forecasts fared in 2016.

Normally, you would be right to be suspicious of a website checking its own work. But Silver is famous enough that, if he were wrong about this, it would be very easy for someone to make a fool of him. Nobody has, which is a reason to trust the analysis.
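The kind of self-check FiveThirtyEight describes can be done mechanically: group forecasts by their stated probability and compare each group’s average forecast with how often the favored candidate actually won. Below is a minimal Python sketch of that calibration check. The function name, the ten equal-width bins, and the toy data are illustrative assumptions, not FiveThirtyEight’s actual method or results.

```python
from collections import defaultdict
from typing import Iterable, List, Tuple


def calibration_table(
    forecasts: Iterable[Tuple[float, bool]], n_bins: int = 10
) -> List[Tuple[float, float, int]]:
    """Bucket (forecast probability, did_win) pairs into equal-width bins and
    return (mean forecast, observed win rate, count) for each non-empty bin."""
    bins = defaultdict(list)
    for prob, won in forecasts:
        # A probability of exactly 1.0 is folded into the top bin.
        index = min(int(prob * n_bins), n_bins - 1)
        bins[index].append((prob, won))

    table = []
    for index in sorted(bins):
        rows = bins[index]
        mean_forecast = sum(p for p, _ in rows) / len(rows)
        win_rate = sum(1 for _, won in rows if won) / len(rows)
        table.append((mean_forecast, win_rate, len(rows)))
    return table


# Toy data (made up for illustration): ten races called at 70%, ten at 90%.
toy_forecasts = [(0.7, True)] * 7 + [(0.7, False)] * 3 + [(0.9, True)] * 9 + [(0.9, False)]

for mean_forecast, win_rate, count in calibration_table(toy_forecasts):
    print(f"forecast {mean_forecast:.0%}, won {win_rate:.0%} of {count} races")
```

A forecaster is well calibrated when the two percentages in each row roughly match. With only a handful of races per bin the observed rate is noisy, which is why the check needs many elections to mean anything.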

The successes of polling should be seen as making a strong case for relying on mechanisms such as prediction markets and forecasting tournaments to understand the world, as implied by the research of scholars like Robin Hanson and Phil Tetlock. Instead of trying to forecast events from the ground up, a better path to understanding is to simply give people an incentive, reputational or financial, to get something right, and trust what the aggregate results of their predictions tell you. Those who have proven themselves under such criteria deserve to be listened to more than those who have not.

When Fox News and the NYT Agree

It is clear that on most politically salient issues, partisanship drives mass opinion. To take one of a million possible examples, Democrats take COVID much more seriously than Republicans.

I could find you similar charts on the economy, crime, the causes of racial disparities, or any other topic you can think of. These divisions are driven by information consumption, as people follow and trust news sources that have the same political leanings as themselves.

While the nature of partisanship is well known, we have largely ignored the fact that there is something on which right-wing and left-wing sources agree. In its latest poll, Fox News has Biden + 10. As of this writing, that’s about the same as the Biden + 9.6 average of all polls, most of which are conducted by left-leaning media organizations or universities.

The conservative Wall Street Journal and the liberal NBC News actually do their polls together, and their latest has Biden + 14. Rasmussen, as mentioned before, disagrees with other pollsters on Trump’s approval rating, but now has Biden + 12 nationally.

Such is the power of skin in the game. If you were to ask pundits from different media organizations to predict, say, what will be the results of economic sanctions on Iran, or the impact of the BLM movement on crime rates, they would differ, with representatives of Fox News, Rasmussen, and The Wall Street Journal predictably on one side, and those from media organizations like NBC, The New York Times, and The Washington Post on the other.

This has important implications. It’s not that we disagree because a lot of issues are hard. Sometimes they are, but other times reasonable minds should converge. I’m sure that if the NYT and Fox News had to make forecasts about the impact of, say, the Affordable Care Act, or how much the temperature of the earth will increase in the coming decades, they would be a lot closer than the punditry and reporting of each side would indicate.

The lesson of polling convergence is in a sense an optimistic one. Many of our disagreements are not the result of our brains being wired differently, or because it is impossible to turn data into predictions. They result from misaligned incentives. Take democracy: as Bryan Caplan points out in The Myth of the Rational Voter, the probability of your vote changing the outcome of an election is effectively zero, so there is no incentive to choose wisely. Leaders know the public is irrational and tribal, so they focus on spin, satisfying their base, and PR over actual results. Much has been made of the fact that those who pushed for the Iraq War continue to work as respected media pundits and scholars today, with many of them being promoted by left-wing outlets like MSNBC for their criticism of President Trump. If we had a system to select pundits based on objective results, instead of a partisan media catering to various tribes of an ignorant public, no one would have heard a word from Bill Kristol, Paul Wolfowitz, or Doug Feith after around 2004.

Despite differences between Republicans and Democrats in how seriously they take COVID-19, the gaps in behavior are actually much smaller than you might expect.

While Democrats are 30-40% more likely to say that COVID is a major threat, they are only 4% more likely to wash their hands more often, 13% more likely to wear a mask, 6% more likely to stay home from work, and 2% more likely to urge friends and family to protect themselves. The largest partisan gap is in purchasing extra supplies of food, at 19%, still substantially less than the difference in how seriously each side says it takes the disease. Partisanship mostly disappears when there is a connection between the behavior of individuals and the outcomes of their actions. How you vote (as an individual, that is), or how seriously you say you take something, has no real-world influence, so people indulge in partisan passions. When it comes to behavior, conservatives and liberals act mostly the same.

(Update, 10/11/20: After writing this, I came across a poll showing massive differences between Republicans and Democrats in COVID-related behavior. I’m unsure how this is consistent with the YouGov poll above from late April, as the issue was already partisan then, but I would guess it has something to do with partisanship taking over people’s brains more and more the closer we get to the election. The point in the two paragraphs above therefore appears to be weaker for individuals acting with limited information, assuming that they are answering questions about behavior honestly, while the argument about better-informed institutions being able to respond rationally to incentives remains.)

The technologies for rational decision-making already exist; we just have to find ways to promote and use them, and select elites into positions of power based on a proven track record of getting things right. In a culture with a healthy epistemological climate, every heated debate would end with the sides agreeing to make a bet, and giving an opinion without being willing to do so would be seen as dishonorable. Newspapers and cable news channels as institutions, and individual pundits, would cover debates about the effects of policies related to healthcare, taxes, and crime the same way media companies deal with election polling: by producing probabilistic forecasts spelling out precisely what they think will happen, given a policy choice.

Unfortunately, we do not live in a healthy epistemological culture. Here’s what Phil Tetlock wrote in his book Expert Political Judgment.

The radical skeptics’ assault on the ivory-tower citadels of expertise inflicted significant, if not irreparable, reputational damage. Most experts found it ego deflating to be revealed as having no more forecasting skill than dilettantes and less skill than simple extrapolation algorithms. These are not results one wants disseminated if one’s goal is media acclaim or lucrative consulting contracts, or even just a bit of deference from colleagues to one’s cocktail-party kibitzing on current events. Too many people will have the same reaction as one take-no-prisoners skeptic: “Insisting on anonymity was the only farsighted thing those characters did.” (emphasis added)

Tetlock goes on to point out that not all is hopeless, however, as some experts did substantially better than others. Figuring out who these people were and what strategies they used was the subject of his follow-up book with Dan Gardner, Superforecasting.

Creating the right incentive structures for more rational decision making should be the goal of all intelligent people, no matter their political preferences. As the case of scientific polling shows, objective standards and skin in the game can be the answers to irrationality and partisanship. The technology has already been developed; the hard part is giving it a more prominent role in institutions like academia and the media.

So Who Will Win?

As of this writing, FiveThirtyEight gives Biden an 85% chance of winning the election. This will be easy to accept if you are a Democrat and harder if you are a Republican, in which case you are probably telling yourself things like “shy Trump voter” and “what about 2016” and “they didn’t sample enough Republicans.” But trust me, the pollsters have considered these possibilities, thought about them more carefully than you have, and their reputations are on the line, while yours is not (if you place money on it, you deserve to be taken more seriously).

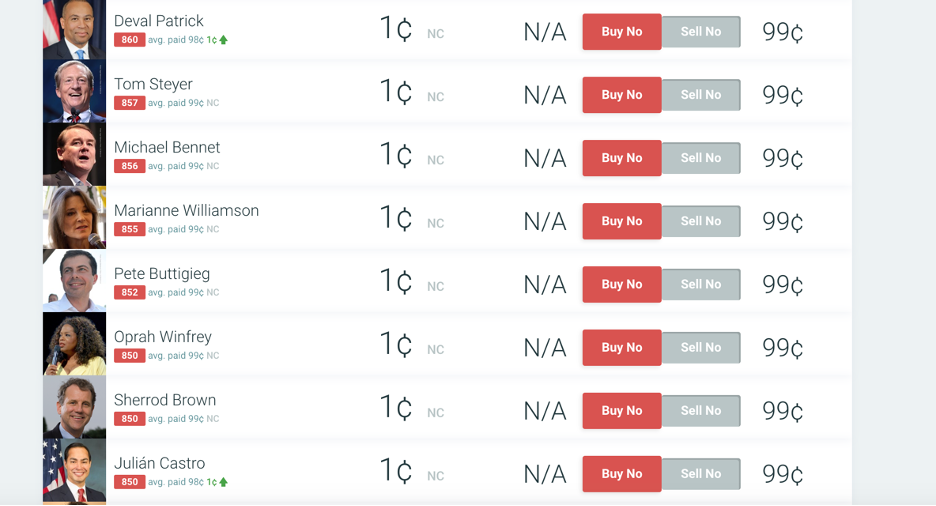

For my part, I bought 3,161 shares of Biden-Yes for winning the 2020 nomination at an average of 26 cents. At the time, I also bet on him to win the presidency at a lower price. I later put a smaller amount into Bernie after he surged, but sold those shares off later. I bet no on everyone else, except for Klobuchar, in whom I made a small investment. Here’s a screenshot from sometime late in the process.

Most people I knew throughout the primaries were saying there was no way it would be Biden. He was too old, too white, too senile, not woke enough. He didn’t seem to have enthusiastic supporters on Twitter. I ignored all that and listened to the polls, assuming that the guy who had led them for the entire year running up to the election was likely to win, and that the second most likely winner was the guy in second place in the polls, Sanders. The betting markets currently have Biden at 66% to win the general election, which is substantially lower than the FiveThirtyEight model’s 85%. I think the latter is closer to the truth, which is why I’m holding my Biden shares, but if you disagree, you can potentially triple your money.
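The “triple your money” arithmetic follows from how these markets price contracts. Assuming a simple binary contract that pays $1 if the event happens and nothing otherwise, its price in dollars can be read as the market’s implied probability, a winning bet returns 1/price per dollar staked, and a bet has positive expected value only if your own probability is higher than the price. The Python sketch below applies this to the numbers above. It ignores trading fees and assumes the Trump side trades at exactly 1 − 0.66 = 34 cents, which real markets need not do; the function names are illustrative.

```python
from typing import Tuple


def payout_multiple(price: float) -> float:
    """Dollars returned per dollar staked if a $1 binary contract pays out (fees ignored)."""
    return 1.0 / price


def expected_return(price: float, your_probability: float) -> float:
    """Expected profit per dollar staked, if your probability estimate is right."""
    return your_probability * payout_multiple(price) - 1.0


def position(shares: int, avg_price: float) -> Tuple[float, float]:
    """Cost of a position, and what it pays if the event happens ($1 per share)."""
    return shares * avg_price, shares * 1.0


# Betting against Biden at 66 cents means buying the other side at about 34 cents.
print(f"{payout_multiple(0.34):.2f}x")  # 2.94x, roughly tripling your money if Trump wins

# Holding Biden at 66 cents while believing the 85% model forecast.
print(f"{expected_return(0.66, 0.85):+.1%} expected return")  # +28.8% expected return

# The nomination bet: 3,161 shares at an average of 26 cents.
cost, payout = position(3_161, 0.26)
print(f"cost ${cost:,.2f}, pays ${payout:,.2f} if Biden is the nominee")
```

Under these assumptions, holding Biden shares is a positive-expected-value bet for anyone who trusts the FiveThirtyEight number, while buying Trump only makes sense for someone who puts his chances well above 34%.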

As mentioned above, the RealClearPolitics average as of this writing is Biden + 9.6. If the polls are anywhere close to accurate and they do not tighten, the electoral college is likely out of reach for Trump.

In the coming weeks, you’ll see many people go out of their way to get around what the polls say. Just the other day, a survey was released showing that Trump voters were more likely to say that they would hide their views from a telephone pollster. Yet if this were useful information, we would expect Trump to be doing better in online polls, where there is no live interviewer to hide from, than he does in telephone polls, and we do not find that. You will see a hundred more of these feeble attempts to tell you why the polls are wrong in the coming month, and you should ignore all of them.

Of course, the polls can be wrong. My argument is that they’re very unlikely to be wrong in a way some random analyst or partisan can predict better than pollsters themselves. Trust objective standards and skin in the game more than you do wishful thinking.

Polls are not perfect, but they can be trusted: they have a good historical track record and have shown the ability to overcome partisan differences. The question is how we can make our larger scientific and political culture more like election polling, and less like accountability-free scholarship and punditry.