Many smart people believe AI will eventually kill us. In the short run, however, it’s made me a lot more productive.

I would estimate that the gains are so large that, when I’m not preoccupied with a book project or have something else going on, I’m probably producing an extra article a week, going from approximately three to four, for a productivity gain of 33%. And the articles are probably a lot better than they otherwise would be in a variety of ways, down to having fewer typos. Extrapolate this to the entire economy, and AI should be making us a lot wealthier.

Of course, you wouldn’t do that, since AI is ideally suited to help with work like my own, which involves gathering, processing, and synthesizing information that is in text form. So maybe a 33% productivity boost is the upper bound of what’s possible right now, but even if it’s a fraction of that in most industries, the potential gains should be massive.

First of all, there is data analysis and the production of charts and figures. Take my recent article on partisanship and crime. Each figure included in the piece would have taken hours to produce and tweak just a few years ago. In graduate school, we would study programming languages like R and Stata in order to do something as simple as produce a bar graph or a chart with an x- and y-axis. You might use Excel too. If you needed to label the data points, change the display of an axis, draw a regression line, etc., each of these would be a new command (or in the case of Stata or Excel, clicking through menu options). The programming wasn’t hard, but you needed to figure it out, and it was easy to forget the lessons. So if I made a certain kind of chart every six months, each time I began a new project there was a learning curve.

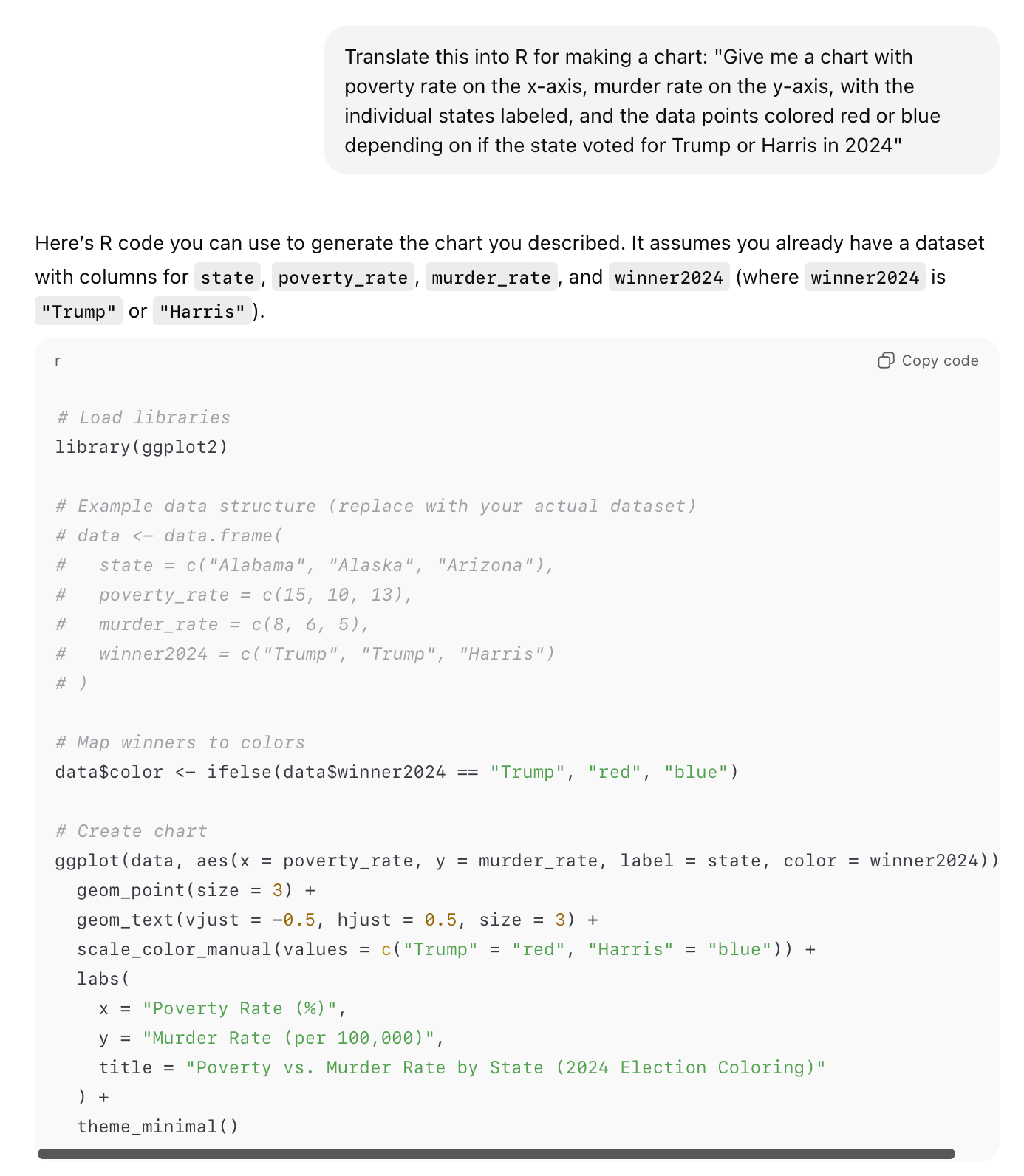

With AI, you just upload the Excel sheet and literally type out what you want it to do. I might say “Give me a chart with poverty rate on the x-axis, murder rate on the y-axis, with the individual states labeled, and the data points colored red or blue depending on whether the state voted for Trump or Harris in 2024.” It pops out like magic.
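For a sense of what that one sentence stands in for, here is a minimal sketch in Python with matplotlib. It is not what ChatGPT produced. It assumes the sheet has been saved as a CSV with columns named `state`, `poverty_rate`, `murder_rate`, and `winner_2024`; those names, and the output file name, are hypothetical.

```python
import csv

# Hypothetical mapping from the 2024 winner column to marker colors.
PARTY_COLORS = {"Trump": "red", "Harris": "blue"}


def load_rows(csv_path):
    """Read the exported sheet into a list of dicts, one per state."""
    with open(csv_path, newline="") as f:
        return list(csv.DictReader(f))


def point_color(winner):
    """Return the marker color for a state's 2024 winner (gray if unrecognized)."""
    return PARTY_COLORS.get(winner.strip(), "gray")


def plot_poverty_vs_murder(rows, out_path="poverty_vs_murder.png"):
    """Scatter poverty rate (x) against murder rate (y), one labeled point per state."""
    # Imported here so the helpers above can be used without matplotlib installed.
    import matplotlib
    matplotlib.use("Agg")  # render to a file, no display needed
    import matplotlib.pyplot as plt

    fig, ax = plt.subplots(figsize=(8, 6))
    for row in rows:
        x = float(row["poverty_rate"])
        y = float(row["murder_rate"])
        ax.scatter(x, y, color=point_color(row["winner_2024"]))
        # Put the state name just above and to the right of its point.
        ax.annotate(row["state"], (x, y), textcoords="offset points",
                    xytext=(4, 4), fontsize=8)
    ax.set_xlabel("Poverty rate")
    ax.set_ylabel("Murder rate")
    fig.savefig(out_path, dpi=150)
    plt.close(fig)
    return out_path
```

Calling `plot_poverty_vs_murder(load_rows("states.csv"))`, where `states.csv` is a placeholder file name, would write the figure to disk. Every detail in it, from the label offset to the color lookup, is something that once had to be looked up or remembered.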

When I fed the instructions above into ChatGPT and asked it to translate them into R, it produced a script that did the same thing.

Again, there’s nothing particularly hard here, but the issue is the constant learning and relearning. You have to figure out exactly where the dollar signs go and make sure all your commas and quotation marks are in the right place. And if something didn’t look right once a figure was produced, a lot of time might be spent figuring out how to fix it. ChatGPT sometimes makes mistakes too. When I wrote my crime article, it was confused about who exactly won Georgia in 2024, and I had to tell it to double-check. But the process of fixing mistakes is obviously much easier.

Coding in order to do statistical analysis and create figures is grunt work. When I had research funds, I would sometimes just hire someone and tell them the kind of figures I wanted made. It would take days or weeks for them to get back to me, but now I have a much faster research assistant who understands instructions better than any human and completes tasks in seconds. Also, I remember there was this one time when I hired a guy who had his pronouns in his email signature, and he complained that I was too curt in my messages to him. He was hurt and actually wrote something like “I’m not a machine, where you just say what you want and expect an output.” I’m not sure exactly what this sensitive young man was looking for in our relationship, but all I wanted was my figures and it was annoying to be given the task of also managing his inner emotional life. Anyway, social scientists no longer need to be coders, and what once made up a large portion of graduate study in fields like economics and political science can now be directed towards other purposes.

ChatGPT is also great for doing more efficient research. Another form of grunt work is having to come up with things like exact dates. To take one example from my forthcoming book, I have a brief section on the Arab Spring. In order to narrate the sequence of events to set the background for making my broader point, in the old days I would have needed to look up individual facts. So without AI, I would have looked at Wikipedia or Googled phrases like “When did Mubarak resign?”, “When did protests in Libya start?”, “How many Arab countries experienced protests or civil strife in 2011?”, etc. Having gathered each individual piece of information, I could put together an account that tied them all together.

With ChatGPT, I simply asked it to give me a timeline of the Arab Spring, and include the specific events I wanted highlighted. Perhaps something like this could have been found through Google before, but it would have taken extra effort to locate it, and it was unlikely that any timeline would have each event that I thought was important and wanted to include. Of course I knew the general timeline of what happened during the Arab Spring. First there were protests and the overthrow of the government in Tunisia, then the same in Egypt, then protests started in Libya and Syria, and Libya had a civil war that eventually led to Gaddafi’s death. But when you’re writing a narrative, you need to be exact, and know stuff like whether Mubarak resigned before or after protests in Libya started, or the exact month Gaddafi died. This is the kind of information you can just ask ChatGPT about, instead of taking the time to put the pieces together yourself.

As with data analysis and creating figures, ChatGPT’s help in gathering information automates the boring aspects of being a writer while preserving the more meaningful and fulfilling parts.

AI is also a great tool to learn about new subjects. I recently decided I needed to study more macroeconomics. Two recent books I’ve been reading are Mankiw’s Principles of Macroeconomics and Scott Sumner’s The Money Illusion. When there’s a concept in either of these books I’m having trouble fully grasping, I will simply take a screenshot of a page or two, put it into ChatGPT, and ask for an explanation. Practically every time, it does a better job than the author, and I can of course ask follow-up questions. I just noticed a feature where you can highlight a section of ChatGPT’s response and request further elaboration.

This raises the question of whether we need the books at all. Especially in the case of Mankiw, which is a textbook and therefore covers basic concepts, I think it would’ve been a lot more efficient to just take the chapter titles and headings and ask ChatGPT to explain the concepts to me. The only reason I didn’t was that I wanted to be able to say I learned macroeconomics from textbooks instead of ChatGPT. The former seems like something a serious person would do, while the latter feels like cheating.

But this is actually quite silly. ChatGPT is a better way to learn the information! A textbook sums up the knowledge produced and the ongoing debates in a field, which is the type of work LLMs do better than humans. With Sumner’s book, in contrast, you are getting a custom take on major debates, so maybe the same logic doesn’t apply and you need to engage with the author’s words themselves. Even here, ChatGPT can often explain concepts better than the author, though you still perhaps need the human to make the original arguments in the first place. Unlike a book, AI can be asked questions, which means you can quickly pick up the background knowledge and greater context necessary for a full understanding of ideas.

Sometimes, I’ll just be in constant dialogue with ChatGPT about a topic that is an interest of mine. For example, I get annoyed hearing people point to China as showing that industrial policy works. Someone once told me that China’s growth started slowing down at a lower level of wealth than growth in Singapore, Taiwan, and South Korea did. Was this actually true? Instead of going back and gathering all the data, I simply asked ChatGPT, and it informed me that this was indeed the case. To make sure the information it tells me is correct, I’ll often take the output, put it into a new chat, and then ask for a fact-check. By building up my store of knowledge, I’m able to make connections between ideas that will be used in future articles and projects.

ChatGPT is great for learning about a debate in an academic field of study. Before, you would need to read the arguments of one side, then the other, and try to find a way to synthesize different sources of information. Often, nobody will have ever addressed a question about a debate in the exact way I would have framed it, or if they did it would be too hard to find the relevant article. Moreover, ChatGPT’s desire to be helpful means that it tailors its output to give the user exactly what he wants. Academics, in contrast, will often write in order to make their work less accessible, for example by following the norms of their field in communicating information even if those norms make it more difficult for readers to understand them.

Before, if there was something that confused me about a paper or I had a question about the background knowledge required, I would simply email the author and wait for a response. Much of the time they wouldn’t get back to me. Now, of course, I simply ask ChatGPT, which, as we’ve seen, usually explains people’s ideas better than they themselves can.

Finally, AI helps me with basic fact-checking, spelling, and grammar. This requires no more than copying and pasting articles into ChatGPT and giving it instructions. Spellchecking is one of those very simple tasks it really struggled with only a year or two ago, but it is now at least as good as something like Microsoft Word.

In addition to helping me write more articles, AI has sped up my book writing: the book I just finished took only about six months from beginning to handing in the first draft. I would look up how long my previous books took, but that would take some digging into my records, and the opportunity cost of doing so is too high because I could otherwise be learning more important things with ChatGPT. Maybe one day it’ll have access to all my emails and file histories and I’ll be able to answer such questions with a simple query.

I’ve already said we probably don’t need textbooks anymore, even though they’ll probably stick around anyway due to vested interests. I assume that the academics listed as authors will be using ChatGPT. But am I even necessary? It seems likely that there will be a point very soon when you could just train an AI model on my body of work and have it produce takes and even long articles and books that reflect my views. When I’ve experimented with this and asked ChatGPT to write something reflecting my opinions and in my voice, it seems to get my views wrong, making them for example much more normie. The tone and style it uses seem to reflect the articles I’ve produced for websites, magazines, and newspapers rather than the newsletter.

Maybe it’s just about getting enough of the training data in there. Or maybe not, and ChatGPT plateaus at about the level where it can impersonate a midlevel writer but not a great one. This would do wonderful things for my ego, as it would suggest I’m working at a fundamentally higher level than others who write for a living. I feel that way now, but it would be good to have the entire trajectory of the most innovative technology of our time confirm it.

Agree with most of this, but I would just say that ChatGPT still has to be double- and triple-checked on contentious issues. For example, I recently asked ChatGPT about the top 10 deadliest wars since World War II, and the current war in Gaza kept coming up. I had to keep going to Wikipedia and telling ChatGPT that "certain war x since WWII has killed way more people," and it kept updating the ranking, eventually admitting that the Gaza War wasn't even in the top 35. I have no doubt that it'll eventually improve, but this will probably always be something to watch for.

>This raises the question of whether we need the books at all. Especially in the case of Mankiw, which is a textbook and therefore covers basic concepts, I think it would’ve been a lot more efficient to just take the chapter titles and headings and ask ChatGPT to explain the concepts to me.

This use case is what worries me most as things stand now. With the propensity of LLMs to generate bullshit, it seems dangerous to use them as a teacher of unfamiliar subjects. Sure, probably not a big deal for just feeding your curiosity for entertainment purposes. But when you are relying on it to inform your work as a public intellectual, there could be significant consequences.

Edit: Just wanted to add that Richard may well have sufficient background to identify incorrect claims about macroeconomics. I was using this as an example, not intending to make a particular accusation.