It was with great interest that I read Leopold Aschenbrenner’s short book (manifesto?) Situational Awareness. On the ultimate question of whether we will all die, Leopold is about midway between Doomers and e/acc types. This means he thinks that the alignment problem is real but can potentially be solved. One of the unique contributions of Leopold’s work in this space is thinking about the geopolitical implications of AI. He believes that we are in a race with China. As with the early days of the atom bomb, it is vital that the free world maintain a lead over its adversaries on the most important military technology of the age.

Some of his suggestions about the need for better security at AI labs seem like low-hanging fruit. Yet Leopold’s argument is much broader. He doesn’t just think that AI has national security implications, but wants us to see it primarily as a military issue:

Simply put, it will become clear that the development of AGI will fall in a category more like nukes than the internet. Yes, of course it’ll be dual-use—but nuclear technology was dual-use too. The civilian applications will have their time. But in the fog of the AGI endgame, for better or for worse, national security will be the primary backdrop.

Leopold predicts that superintelligence will be here by about 2027. Let’s assume that he is correct on this point, and also that superintelligence can be effectively used for military purposes to give one society an edge over others. The alternative to getting serious about security is preparing for a world in which Chinese espionage closes the gap with the US and, because China is good at mobilizing resources and making incremental tech improvements, it eventually comes to dominate the world.

Leopold assumes that China will steal the technology, and that it will put a lot of time and effort into AI and using it towards geopolitical ends. The Chinese state must be competent enough to recognize the potential of AI, and direct resources towards developing and deploying the technology.

What basis is there for believing this? Leopold:

I, for one, think we need to operate under the assumption that we will face a full-throated Chinese AGI effort. As every year we get dramatic leaps in AI capability, as we start seeing early automation of software engineers, as AI revenue explodes and we start seeing $10T valuations and trillion-dollar cluster buildouts, as a broader consensus starts to form that we are on the cusp of AGI—the CCP will take note. Much as I expect these leaps to wake up the USG to AGI, I would expect it to wake up the CCP to AGI—and to wake up to what being behind on AGI would mean for their national power.

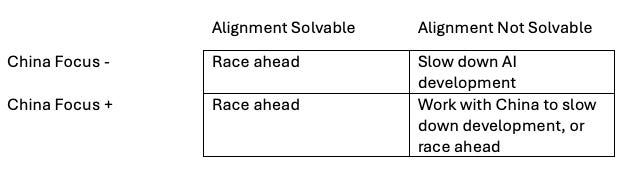

To me, there seems to be a 2x2 matrix of different views one can hold, again assuming that we are on a short timeline. By “alignment solvable” I mean that it is solvable while we are moving quickly towards AGI within the next few years, not whether it is theoretically solvable with unlimited time and resources.

Leopold is in the bottom left quadrant. The implication is that we should not only lock up the labs, but race ahead, because we have to beat them. Yet I don’t think his argument actually depends on China being smart, because if it is clueless about AI, then why would we want to slow down this technology that could potentially bring so many benefits to humanity?

If alignment is not immediately solvable, then perhaps what we should do is slow this train down. In a world where China won’t care or think about AI, this is something we can undertake ourselves. The scariest world is in the bottom right. It would require careful thought about whether some kind of arrangement with the CCP is possible. If not, then we are off to the races to at best be the first ones to develop a likely unaligned AI. China might win, and even if we “win,” that may doom humanity anyway.

I think that one thing that is clear is that China focusing on AI makes things worse no matter what we think about alignment. If alignment is difficult, we’d rather be in a world where the US alone can put the brakes on the technology, rather than try the more difficult task of coordinating globally, or going off to the races to try and be the first to develop an unaligned AI, which would obviously be a disaster.

I think, however, that the danger in Leopold’s entire exercise is it might itself make China focus on AI. Dwarkesh Patel actually asks him about this in their recent podcast (see 3:55 here), and Leopold acknowledges that this is a tough tradeoff, but he just hopes more Americans than Chinese will read his work.

I think that this is a safe bet. The problem though is that the Chinese tend to be very influenced by Western ideas. One can look back at the history of the PRC since its founding to see this. Communism itself was of course an idea imported from the West. When Chinese students protested at Tiananmen Square, they called for Western-style democracy. After the disaster of Maoism, China eventually opened up its economy along the lines suggested by European and American experts. The one-child policy, through which China shot itself in the foot and brought on the great fertility crisis it faces today, was inspired by alarmist ideas about population growth that were originally promoted by groups like the Club of Rome.

While at the University of Chicago, I once took a class with John Mearsheimer in which he mentioned that he sometimes went to China and would tell them that although they are nice people, his theory of international relations says that conflict between their countries is practically inevitable. I found this disturbing, as it seems to be the Platonic ideal of a self-fulfilling prophecy. If the Chinese are listening to Mearsheimer, it increases the odds that his theories will be proved correct. There are many ways to get into an arms race or begin a cycle of aggression, but the surest way of doing these things is through both sides thinking such outcomes are inevitable.

Right now, the West does not think of AI primarily as a national security issue. I haven’t seen much evidence to indicate that China does so either. And if other kinds of technologies give us anything to go by, it may if anything use government to hinder its development through regulation.

There’s an analogy here to biotech. Supporters of genetic engineering and embryo selection have sometimes argued that China is soon going to be creating super babies and so will eventually take over the world. Supposedly, it is our Christian morality and woke ethics that prevent us from realizing all the benefits of such practices, but a smart and pragmatic people like the Chinese can’t be expected to make the same mistake. Supporters of genetic enhancement are like Leopold in the sense that they are assuming the Chinese can be expected to see the world as they do and have similar beliefs about what is important.

As things stand, however, China is more restrictive than the US on biotech policy. Surrogacy is not technically illegal but in practice it is treated as a crime. When He Jiankui became the first scientist to use CRISPR to create a genetically edited baby, he wasn’t celebrated by the authorities for helping usher in a new era of Chinese genetic superiority and world domination. Instead, after an uproar among ethicists in the West, he was sentenced to three years in prison. Interestingly, He Jiankui’s foreign collaborators in the study have not faced any professional consequences, much less prison. Steve Hsu has talked about how in 2010 when he tried to sequence the genomes of high IQ Chinese kids, he couldn’t get permission to do so even with the consent of their parents. Meanwhile, in the US, you can engage in embryo selection and hire surrogates to your heart’s content. Private sector DNA sequencing is basically unregulated, whether for adults, children, or fetuses. Policy is not as libertarian in Europe, but even there things are not nearly as bad as in China.

I think it’s safe to say that we are not on the cusp of a crash program to make a race of Chinese super babies. But why not? They don’t have Christianity and they don’t have woke, so you’d think they would rationally see the upsides for individuals and their country. I think the answer here, and this applies to other states too, is that China simply isn’t all that rational, in the sense of being a nation that seeks unified goals rather than a system that goes off of inertia and vibes. Political scientists talk about the difference between actors applying a “logic of consequences” versus a “logic of appropriateness.” Rational choice theory as applied to states assumes the former. China wants to be powerful and succeed, and so will look for whatever tools it might have at its disposal. Yet individuals in government commonly apply a logic of appropriateness, in which the identity of an actor along with cues from similarly situated individuals determine the decisions he makes. So when there’s a pandemic, a logic of consequences would predict that a public health official would carefully consider the best ways to stop the disease. A logic of appropriateness would predict that the same individual will primarily look to how individuals they consider their peers have handled the same problem. From what we saw from public health officials the world over during Covid-19, come to your own judgment about which theory better explains how the pandemic unfolded.

The reason China is not trying to CRISPR and embryo select its way to world domination, then, is that no one else is doing so. They have a general conservatism within their culture, in which their first instinct towards anything strange and seemingly unnatural is to restrict or ban it. Had Western nations twenty years ago started to talk about the national security implications of genetic enhancement, the Chinese would’ve probably woken up and seen the potential benefits of such technology. Instead, we’ve basically kept government out of private decisions regarding issues like IVF and ignored any potential national security implications down the line. This is true even though increasing the birth rate and health and intelligence of the population is bound to eventually have geopolitical implications.

Note that governments following a logic of appropriateness means China can influence the United States too. Some have argued that lockdowns like the ones we experienced in 2020 would have previously been seen as unthinkable in the West, but for the fact that the disease started in China, where they care less about civil liberties. This meant that adopting similar policies in other countries didn’t appear as extreme. That strikes me as plausible, but much more often ideas flow from West to East.

I’m sure there are some people in China arguing that AI should mainly be seen as a national security issue, and parts of the government will at least give lip service to the idea. Government bureaucracies will commission and publish reports on the subject, like they do on a lot of topics, if they haven’t already. The question is whether China would, absent cues from the US, make this one of its main priorities. If you believe that superintelligence will be here in the next two or three years, there just isn’t that much time for China to wake up and undertake a crash program. They’ve slept this long, why not believe they can sleep a little longer? Leopold seems to assume that there will be a moment when events necessarily snap them out of their stupor, but by then it could be too late.

When it comes to AI, I think biotech is a better analogy than nuclear weapons. The latter were originally created for military purposes in the midst of a war. In contrast, AI and different forms of biotech have started out in the private sector as technologies designed for commercial purposes. Of course, the same could be said about airplanes, and they were quickly adapted for war. But again, short AI timelines means that all of this has to happen much more quickly than with previous innovations. Leopold’s timeline does not allow for decades of delay as both the US and China integrate AI technology into their military plans and strategic thinking. With longer timelines, we need a different analysis.

As with China, I think Leopold also overestimates the US government’s rationality and ability to move towards a clear goal. He argues that when Washington realizes how powerful AI is, our leaders are not going to leave it in the hands of private corporations. But why not? One could’ve said the same thing about the internet at its inception. Imagine how powerful something like Google would have seemed before it came into existence. Were we to believe that Washington would let a private corporation become the main curator of information for the entire country, and along with other tech giants, surpass the government in ability to monitor and manipulate mass sentiment? The internet has remained relatively free because American institutions and traditions tend to default towards leaving decision-making in the hands of the private sector. This is due to ideology, but perhaps more so to us having a system with many veto points designed to make it difficult for the federal government to take drastic action absent widespread consensus on what is to be done. Remember that one of our two major political parties sees small government as an end in itself, and the other also cares somewhat about civil liberties.

If I’m correct about all of this, then Leopold’s reasoning in hoping that a lot more Americans than Chinese read him has a fatal flaw. The danger isn’t that Xi Jinping reads Leopold; it’s that Leopold has enough influence in the US that the idea of AI as primarily a national security question becomes an assumption of policy makers, in which case China starts thinking similarly for memetic reasons. And once the Chinese giant is awakened, it brings to the AI arms race all the strengths that Leopold has written about, including a large population with a lot of STEM talent and the ability to centrally direct resources towards a clear goal.

Perhaps the best possible outcome is to quietly implement security protocols at AI labs just in case China undertakes a crash AI program involving espionage, while not making too big of a deal about it and hoping that this doesn’t make clear to them that the US government is thinking about this issue in national security terms.

This should probably be done to a certain extent. Yet there are also potential benefits to a more actively cooperative stance. Given my model of China, I would be much more bullish than most people regarding the potential for us to come to some kind of agreement with them on slowing down AI research, if we decided that was the best thing to do. But if Leopold is right about the shortness of AI timelines and alignment being solvable before we all die, one could argue we should not speak about this as a matter of national security and hope the Chinese continue to focus their attention on more important issues like keeping sissy men off TV.

I listened to the whole Dwarkesh podcast, and I could not get over Leopold’s apparent immaturity.

He may be brilliant, but the most dramatic events in his life so far include failing to notice that his patron (SBF) was a colossal fraud, then getting fired from OpenAI, mostly because he had incredibly naive views about how organizations and power work. Both of these reveal a gross lack of judgment.

In the podcast and in the manifesto, which I’ve only skimmed, he purports to be an expert in a dizzying array of fields, including history, politics, military strategy, espionage, counter-espionage, AI, ethics, and economics, to name just a few. It is rare for such a young person to have mature judgments about topics like history and politics, where great work is normally done by much older practitioners and scholars.

So overall, while I worry about AI and China, I cannot put much faith in Aschenbrenner.

He strikes me as a dorm-room genius and a TechBro.

Time will tell, I suppose, but his track record is already very bad.

Yet another article about “AI” that predicts it will be magically all-powerful and control the world but doesn’t say anything about why or how this miracle will occur, or why LLMs are going to be epochally more transformative than other forms of algorithmic automation.