Why the Technocapital Machine is Stronger than DEI

Affirmative action won't cause planes to fall out of the sky

There has recently been a freakout on the right about DEI in the airline industry. The narrative goes that planes will soon be falling out of the sky due to attempts to diversify the workforces among pilots and air traffic controllers. Steve Sailer has written about this, Amy Wax mentioned it in our recent conversation, it has become a staple of Fox News, and Elon Musk has gotten in on the action, for which leftists have denounced him as a practitioner of “race science.”

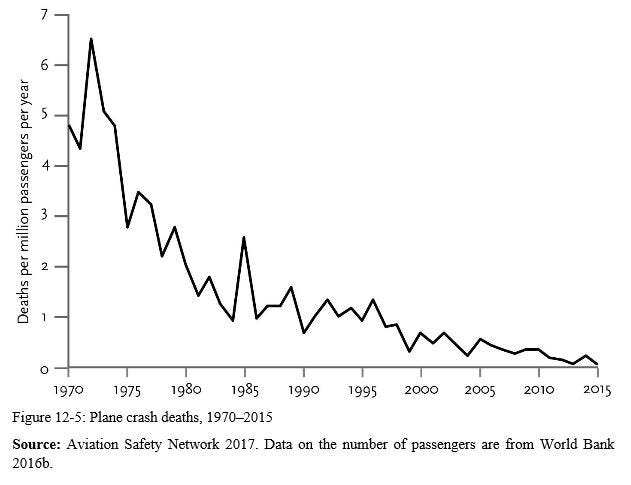

It took Anatoly Karlin to inject a bit of sense into the discussion, pointing out that flying is safer than ever.

I think this discourse demonstrates the limits of anti-wokeness. It goes against my interest to say this, as it may make you less likely to buy my book on the topic, but I think that conservatives in many ways exaggerate the impact of race and gender issues, which leads them to focus too much on DEI and ignore topics that are potentially much more important.

I don’t doubt that American institutions are pushing for less competent people to get jobs they don’t otherwise deserve in important fields, and that this has costs. Nonetheless, there is still little evidence that the world is falling apart, and equity initiatives are far from the only ways in which our society deviates from sound policy based in meritocratic principles. One would think that if you predict that planes will fall out of the sky soon due to DEI, and it doesn’t happen, you would step back and wonder what is lacking in your model of the world. In this case, I think that there is something off about how the right thinks about the concept of merit, putting too much emphasis on top-down diktats and not enough on the necessity of decentralized processes designed to aggregate and share information. The case of the missing airline crashes serves as a useful tool to demonstrate this point.

Markets Keep Planes in the Sky

When Karlin argues the “technocapital machine is stronger than DEI,” he gets at the idea that people who spend too much time focused on wokeness tend to drastically underrate Western institutions. The main reason that planes don’t crash as much as they used to is that, as trite as this sounds, airlines have very strong incentives not to kill their customers and crews. They have much more information about their employees and about what is good or bad for their business than anyone watching from the outside, and it is in their best interest to get airline safety right. Markets drive people to give their money to the companies that best balance safety and convenience, along with whatever else customers value, and employers work hard to avoid hiring individuals who can’t satisfactorily do their jobs. In addition, we have our governing and legal institutions, which provide direct oversight of airline safety and also allow lawsuits against individuals and entities that cause harm through gross negligence.

For this reason, I’ve never worried too much about “woke capital.” I believe wokeness is irrational, and markets are the way we foster and encourage rational behavior. When I see private businesses doing things that seem stupid, like hiring more incompetent minorities than they should, I look first for an explanation based in government mandates. If there isn’t one, that opens up other possibilities: maybe the jobs that the less qualified people are getting don’t matter all that much, or companies don’t actually follow through on the DEI initiatives they promise. Markets are ruthless, constantly rewarding competence, convenience, and cost-effectiveness, and overall providing the best experience for the consumer, who is mostly unsentimental and trying to maximize his own well-being. Given political attitudes among upper-class professionals, it’s unsurprising that corporations sometimes go beyond what the law requires on DEI issues, but when they do, they’re more likely engaging in mindless virtue signalling than doing anything that harms the bottom line.

To take one example, during and after the 2020 race protests, the top 50 US corporations pledged $50 billion to racial justice causes. As it turned out, around 90% of it went to loans or investments they hoped to profit from, and many corporate pledges were simply never followed up on. Of the money promised to the cause as of October 2022, 93% came from finance, which employs fewer black people than almost any other industry. Conservatives often criticize corporations for being hypocrites on identity issues, but what’s the alternative? That they take inclusion seriously to the point that it hurts the bottom line? Left-wing race hustlers are dumb, and we are lucky they can be satisfied with cheap talk and a bit of balancing in terms of hiring. This is not an ideal state of affairs, but there are much worse ways to run society, say, by having government work to “protect jobs” and thereby bring progress to a grinding halt.

By underestimating the brilliance of well-functioning markets, anti-wokes make a mistake similar to one more commonly associated with wokes. According to leftists, capitalists engage in discrimination by doing things like denying blacks loans they should otherwise get, or not hiring them for jobs that they’re qualified for. If this were true, the answer would be to start your own business that only lends to and hires blacks, and see how things go. Of course, there isn’t much discrimination in markets, because people are too selfish to leave money on the table. Even literal Nazis wouldn’t stop doing business with Jews. Conservatives tend to understand this when they’re arguing against the gender pay gap, but then believe that airlines are about to let planes fall out of the sky and kill their employees and customers because they’re so desperate to have fewer white males in the cockpit.

Human beings operating in a market environment are not omniscient. They often do stupid things, and can of course always act according to prejudice or ideological conviction. But markets are by far the best tool we have to place limits on the harms done by false ideas and irrational impulses, and ultimately to weed them out.

Two Reasons to Be Anti-Woke

All of this gets to the idea that one can divide anti-wokes into two different camps. Some people dislike wokeness mainly because it discriminates against certain groups, with institutions disfavoring whites, Asians, and men. Others, including myself, see the problem of wokeness as mostly being yet another case of government interfering with markets.

The importance of this distinction became clear to me when I read an article by some anti-woke writer arguing that the way to fight cancel culture was to build stronger labor unions, so employers couldn’t easily fire workers without good cause. To me this misses the entire point of why wokeness is bad in the first place. In general, employers are the people who have the information and incentives to understand whom they should hire or fire. Civil rights law comes along and claims to know that a certain percentage of the workforce should be black females, and then punishes companies if they fall short. Organized labor involves government forcing businesses to negotiate with supposed representatives of their workforce, which always leads to locking in existing employees and discriminating against people looking for a new job, while rewarding seniority instead of merit. The ability to hire and fire is fundamental to the process of information aggregation that makes the economy work, in labor just as in other markets. To take away that right because some employers might commit injustices with it is to deal with a relatively small problem by striking at the heart of the institutions and processes that have created a high standard of living across the West, and especially in the United States.

Whether you oppose wokeness mainly because it’s anti-market or because it’s anti-white male has implications for what you focus on. If your concern is government interference with markets, then voluntary programs to increase diversity, to the extent that they’re not forced upon institutions by the government, are unlikely to matter much. Air traffic controllers are federal employees, so there might be more to worry about there than with pilots, though even here I trust that those who run the federal government think it would be bad for them if planes were crashing all the time. People seem much more willing to accept government incompetence that merely inconveniences them, as with the TSA, so it’s possible DEI leads to some harms in that area. But pilots will generally be fine, because they work for private corporations subject to price signals.

The ability of markets to place limits on what equity advocates want is why wokeness tends to be at its worst in university settings, which are largely insulated from market discipline. Airlines, in contrast, have to actually attract customers who pay for what they want with their own money rather than federal subsidies. Note how liberals always seem to be rhetorically winning the DEI debate, with corporations and institutions promising to “do more,” while activists nonetheless never seem to get the numbers that they want. To take one example, the percentage of physicians who are black men has remained basically unchanged for a century. Blacks are 13% of the population and only around 6% of doctors, and in some subfields of medicine they have close to zero representation. Why? All the virtue signalling in the world won’t change the fact that patients don’t want to die, hospitals don’t want to be sued out of existence due to malpractice, and coworkers don’t want to have to pick up the slack for incompetent doctors. The Killer King hospital is a good example of what happens when diversity ideology meets reality. Perhaps the 6% figure for black doctors is too high, and it should be 2% or so under a completely meritocratic system. Regardless, market forces and generally non-corrupt institutions place limits on how much harm DEI can do. I think that medical schools are less subject to market forces than the practice of medicine is, though even medicine strays further from market principles than most fields and industries. Because the practice of medicine still provides a check on what the schools produce, I’m less frightened by medical schools lowering their standards than a lot of people are.

Note that even though medical schools seem to be admitting a lot more black students than before, those students tend to drop out at much higher rates, and when they become doctors they are more likely to be investigated and disciplined by medical boards, likely causing more of them to either leave the profession or take on patients with less difficult problems. In other words, there are plenty of chances for the field to correct the mistakes made by medical schools when they grant racial preferences.

Last year, Palladium published an article titled “Complex Systems Won’t Survive the Competence Crisis,” which has received a lot of attention and which I think is representative of a genre that is too pessimistic about American society and blames the failures that do exist on the wrong things. One subheading of the piece assures us that “the American system is cracking,” pointing, of course, to DEI. Yet beyond a few anecdotes, it offers little evidence for this claim, and it makes not even a token attempt to show that failures are becoming more common or to establish a direct connection between the ones that have occurred and identity-based preferences. The author presents no quantitative data on declining American competence in the areas he writes about, mainly business, medicine, and airline safety. What I would consider some of the most obvious failures of American society over the last several decades, such as urban dysfunction and higher costs of housing and infrastructure, have little to do with diversity hiring. They generally involve other public policy mistakes like NIMBYism, NEPA review, the unwillingness to use technologies like facial recognition and DNA surveillance, and the Ninth Circuit making it nearly impossible for localities to take the homeless off the streets. Other important failures, like our low and falling birth rate, are practically universal across developed countries and therefore can’t be blamed on DEI. If you want to argue that airline safety is getting worse, you need to show the plane crashes, and if you believe that American business is committing suicide on the altar of diversity, that should be reflected in the S&P 500 and in the US falling behind the rest of the world. But those who exaggerate the impact of DEI let their preferred narrative drive the search for data, rather than approaching the evidence with an open mind and going from there.

The Real Problem with Pilot Hiring

Just because planes aren’t going to fall out of the sky anytime soon doesn’t mean that there are no problems with the airline industry. But one has to do a bit of research to find out what those are, rather than simply assume they have something to do with hot-button culture war issues. In this particular case, we have if anything too much “merit” when it comes to hiring pilots. The US used to require only 250 flying hours before an individual could earn their license. After a crash in 2009 that doesn’t appear to have had anything to do with the amount of training the pilots involved had received, the requirement was raised to 1,500 hours, making the US a global outlier. The former CEO of Spirit Airlines explains what happened next:

The rule has made it very expensive to decide to become a pilot — about $250,000 out of pocket and two or three years for people not trained by the military. It also has made it difficult to attract new populations, including women and minorities, into the pilot profession. Most importantly, it is seen by some as actually reducing safety since people spend years getting flight experience in areas not necessarily associated with flying commercial aircraft in a complex system, and end up entering that system unprepared…

According to the FAA, there are about 164,000 ATP licenses granted in the U.S. This includes people who can no longer legally fly commercially due to age or illness, and pilots who have not maintained medical certification. Estimates for pilots needing to be hired by the airlines for 2022 range from 12,000 to 15,000. Yet, the current rate of training is expected to produce only about 6,000 pilots this year. This means the pilot pipeline in the U.S. is producing less than half of the pilots needed to support the fleet plans of the U.S. airlines.

As would be expected when government sets an arbitrary standard, it doesn’t appear to do all that much for safety.

As importantly, these 1,500 hours can all be earned flying small, single engine planes in rural areas, or even flying hot air balloons. During the years of building these hours, most applicants do very little to train themselves in the career they plan to enter, such as flying big jets into New York and Chicago.

The largest pilot union opposes changing the 1,500-hour rule, and politicians supported by organized labor have denounced any attempts to move back towards the old standard. This makes sense, because preventing other people from being able to become pilots keeps wages up for those who already have the job. This is what unions do, as they are a way for small concentrated interests to extract value by harming the rest of society.

It’s interesting to note that the CEO of Spirit Airlines blames the 1,500-hour rule for the lack of diversity in the industry. Here we see capital using a DEI argument against labor. If you’re instinctively just anti-woke, this might turn you off and you might feel tempted to defend the 1,500-hour rule as a way to protect merit and save the jobs of the supposedly hyper-competent white male pilots who are holding the world up on their shoulders. That would be a mistake. An arbitrary standard requiring you to spend years flying hot air balloons is not the way to protect merit. I don’t mind the DEI argument in this context. It might actually be true! Some people don’t have $250,000 to spend and years to waste before they get a job, and maybe they’re disproportionately women and minorities. A belief in merit should lead one not to be obsessed with race and sex all the time, but rather to advocate for the removal of arbitrary barriers that privilege existing interests over employers, customers, and new potential workers.

What should the training requirements for pilots be? I know nothing about flying, and have no idea. It’s enough to understand that the incentives are sufficiently aligned for markets to figure it out. Nothing prevents airlines from setting higher standards than those required by law. The fact that Canada mandates only 250 hours and we require six times as many, while flying in that country appears no less safe, implies that we should at least go back to the old standard. Maybe we can maintain some absolute minimum to make people feel safe, but there’s no reason to raise the standards to the point that they create barriers that airlines wouldn’t put there themselves, given their clear interest in not having their planes crash, which again is very bad for business.

I agree with the standard anti-woke argument that merit is a good thing. But the market, with its well-aligned incentives, is the process through which true merit is determined. Government fiats are a terrible way to ensure that we have the best fit between jobs and workers. Of course, it’s also important to remember that merit is never the only consideration. You don’t want 160 IQ people to be street sweepers, even if they would do the best job at it, because society could make better use of their talents elsewhere. Only markets can balance merit with concerns relating to opportunity costs and efficiency, and government regulations usually get in the way of that process working itself out.

Learning from History

As I’ve argued before, on fundamental questions we have to be guided by history. I used to think democracy was fatally flawed and America would be overtaken by China, but eventually changed my mind based on real world events. Anti-wokes need to take a step back and wonder why, as we’ve gone crazy with DEI, American society has been working so extremely well relative to the rest of the world.

The lack of evidence that DEI is destroying the country should be taken in alongside the very strong evidence that anti-market policies make nations poor. Here’s a graph that makes this point.

The countries with the most years of negative growth since 1950 are, in order, Libya, Argentina, Syria, Iraq, Chad, Congo, Sudan, and Venezuela. This is not a list anyone wants to be on. That’s six countries in Africa and the Middle East that have experienced civil wars, and two Latin American nations that did it to themselves through leftist economic policies. The experiences of Europe and East Asia have been less extreme, but those countries tend to economically underperform relative to their IQs.

I think controversies around the airline industry demonstrate how regulations that have nothing to do with race and sex are often worse than those that do. Unlike DEI, which is a tax you can afford to pay or get around, hard mandates like the 1,500-hour rule place more tangible and less avoidable burdens on businesses. They also tend to be more popular, or ignored altogether. Companies know that if they go too far on DEI they are subject to a backlash, while basically no one is clamoring for the federal government to get rid of what has become the largest artificial barrier to training and hiring new pilots. DEI strikes many as an open assault on merit, while the 1,500-hour rule can falsely claim its mantle.

The only plausible case you can point to where something like wokeness or DEI arguably destroyed a nation is South Africa, but the demographics had to be really bad for that to happen. If we’re ever at the point where blacks make up 80% of America and whites are down to 7%, then wokeness could become as much of a threat as anti-trade and pro-union policies. And even South Africa is not nearly as big an economic basket case as Argentina and Venezuela have been.

One concern I have with anti-wokeness is that on the right it is often correlated with anti-market sentiment. I believe that a lot of the new right-wing enthusiasm for labor unions is a way for individuals to signal that they are on the side of normal white people over the cosmopolitan forces of capital and their darker allies. Anti-trade sentiment is an even more obvious way for conservatives to show their nationalist bona fides. In practice, anti-immigration and anti-trade attitudes are strongly correlated, even though the only sensible arguments against immigration – that new groups increase crime, vote a certain way, and change the culture – have no relevance to the question of whether we should open our markets to foreign goods and services. Some people just want to signal their belief that foreigners are icky, which they can do by calling for government to ban all interactions with them. But being hostile to free trade is not a matter of favoring your own nation over others. Rather, it makes both your own country and foreign nations poorer in order to help a tiny minority of the population.

Some people just dislike wokeness for aesthetic reasons, and that’s fine. But those opposed to the movement on the grounds that it will destroy Western civilization should probably focus their attention elsewhere.

I agree directionally. But there are cases where it's best NOT to be sanguine about markets being a sufficient antidote to DEI.

For example, industries or domains with loose feedback loops. The most obvious examples are places where markets aren't the primary resource allocation mechanism, such as bureaucracies, the military, or academia, where job protection and a lack of consumer feedback mean it can take quite a while for underperformance to feed back into hiring processes. Wokeness in public health is a case in point (it arguably already caused thousands of incremental deaths), and it may cause many more in expectation when the next pandemic comes around. But even within for-profit entities, you can have loose feedback loops, especially for junior employees who don't directly affect the bottom line (this is especially so if it's also culturally harder to fire minorities). But junior employees are less likely to have real-world impact anyway, positive or negative. Over time, however, it can create a talent bottleneck as these incompetent junior employees rise up the ranks, though I'm not sure the problem is quite that bad anywhere yet.

More importantly, I think it's a mistake to consider DEI a static problem. DEI is much more like terrorism than malaria. Malaria doesn't necessarily get worse if we don't take action; it isn't encouraged by our inaction. Both terrorism and DEI are. So an EA-style utilitarian analysis will underweight its importance.

Having said that, I agree that conservatives spend way too much time on this, and I'd be surprised if, even after accounting for the dynamic nature of the problem, it featured in my top 5. The biggest negative impact is probably going to be via damaging the credibility of signalling tools (like universities), but I'm not sure that'll be entirely bad.

I worked at Boeing for many years, in a senior role. Every person took safety seriously. I recall a meeting in 2003 where we forecasted that by 2020 we would have solved for every redundancy and failure except for the very edge of human failure, and that failures in the 2020 time frame would have something like a one-in-10-billion probability. Our question in 2003 was how to engineer around even those errors.

I raise this as an example to reflect how deeply Boeing thinks about its product and safety. No industry does the same. 250k people die each year in healthcare from medical errors. Imagine if we left airplane safety to human error.

I believe the 737 MAX MCAS accident would likely not have happened with a US pilot in the left seat. US pilots simply have more training. It is an engineer's job, though, to make sure flight safety is not subject to human frailty. Engineering a solution to be immune to human error is likely easier in healthcare, which is abysmal, than in aerospace, which is exceptionally safe.

The recent error in the plug door is likely a failure to follow process. Procedure is pretty clear: the mechanic needs to stamp the manufacturing log to show that they tightened the bolts, and QA needs to stamp it to show that they inspected it. The system is designed for an IQ of 90 with redundancy. Because Boeing, Spirit and the FAA care about safety, they will figure out what happened and correct it. The duty in aerospace is to engineer the systems to be safe no matter what humans do.